Point Light Attenuation

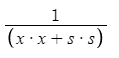

In the real world, light attenuation is governed by the inverse-square law:

$$\text{attenuation} = \frac{1}{d^2}$$

d = Distance to the light

However, in computer graphics things are a little trickier. The inverse-square law works for real lights because they have area, so the projection of this area onto receiver geometry shrinks with the square of the distance. Point lights are an approximation, and applying the law to them produces one obvious problem: as distance approaches zero, lighting intensity approaches infinity.
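To make the problem concrete, here is a minimal Python sketch that evaluates the plain inverse-square law at shrinking distances. The function name `inverse_square` and the sample distances are illustrative assumptions, not taken from any renderer.

```python
def inverse_square(d):
    """Unmodified inverse-square attenuation for a unit-intensity point light."""
    return 1.0 / (d * d)

# Attenuation grows without bound as the receiver approaches the light.
for d in (10.0, 1.0, 0.1, 0.01, 0.001):
    print(f"d = {d:>6}: attenuation = {inverse_square(d):.6g}")

# At d == 0 the expression divides by zero (ZeroDivisionError in Python).
```

Every tenfold reduction in distance multiplies the result by 100, so a surface touching the light would be infinitely bright.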

There are multiple ways to solve it.

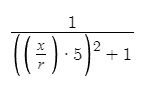

The standard Unity rendering pipeline uses a completely fake falloff curve that looks similar to this:

r = Light Range

This is an arbitrary hand-tweaked function. It has the nice property of being exactly 1 at the center, but the shape of its falloff is fixed: it depends only on distance relative to Range, not on absolute distance, so the same gradient is simply scaled by the Range parameter. That even allowed Unity to store it in a texture. Unfortunately, it is an 8-bit texture, which lacks precision and produces visible banding when rendering with gamma correction.
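The two properties described above, a shape that depends only on distance relative to Range and the precision loss of 8-bit storage, can be illustrated with a small Python sketch. The curve `stand_in_falloff` is a hypothetical placeholder, chosen only because it equals 1 at the center and 0 at the range. It is not Unity's actual function, and all names here are assumptions.

```python
def stand_in_falloff(t):
    """Hypothetical curve on normalized distance t = d / r (NOT Unity's real function)."""
    t = min(max(t, 0.0), 1.0)
    return (1.0 - t * t) ** 2

def range_scaled_falloff(d, light_range):
    """The curve only ever sees d / r, so Range just stretches the same gradient."""
    return stand_in_falloff(d / light_range)

def quantize_8bit(value):
    """Round a [0, 1] value to the nearest of the 256 levels an 8-bit texture can hold."""
    return round(value * 255) / 255

# 10001 distinct distances across a light with Range = 10.
samples = [range_scaled_falloff(i / 10000 * 10.0, 10.0) for i in range(10001)]
distinct_levels = len({quantize_8bit(v) for v in samples})
print("distinct values after 8-bit storage:", distinct_levels)

# Halfway to the range gives the same value regardless of the range itself.
print(range_scaled_falloff(5.0, 10.0) == range_scaled_falloff(50.0, 100.0))
```

However many distances are sampled, 8-bit storage can return at most 256 different values, which is where the banding comes from.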

Bakery's "physical falloff" defaulted to a different formula:

This function tries to combine the best of both worlds by being 1 at the center while following an inverse-square-like falloff at larger distances. A similar function is also used in UE4 (you need access to the UE4 GitHub repository to open that link).

However, the "1" constant in this case is arbitrary, so the result will look different depending on scene size/units.
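The unit dependence can be sketched in Python. The exact formula is not reproduced in the text, so this example assumes the form 1 / (d² + 1), which matches the description (1 at the center, a constant "1" in the expression). Treat the function as an illustration of the idea rather than Bakery's actual code.

```python
def assumed_physical_falloff(d):
    """Assumed form 1 / (d^2 + 1); the real formula may differ."""
    return 1.0 / (d * d + 1.0)

# The same physical spot, one meter from the light, in two unit conventions.
one_meter_in_meters = 1.0
one_meter_in_centimeters = 100.0

print("at the light:     ", assumed_physical_falloff(0.0))
print("1 m, scene in m:  ", assumed_physical_falloff(one_meter_in_meters))
print("1 m, scene in cm: ", assumed_physical_falloff(one_meter_in_centimeters))
```

Because the constant does not scale with the scene, the same physical distance gives very different brightness relative to the center depending on the units the scene was built in.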

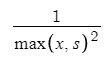

Frostbite and HDRP use an almost unmodified inverse-square falloff, but clamp the distance to prevent division by zero:

$$\text{attenuation} = \frac{1}{\max(d, s)^2}$$

d = Distance to the light

s = Light Size

In both of these renderers, light size is hardcoded to 0.01 (1 cm), which means the brightest value of an untinted light is 1 / 0.01² = 10000.
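A minimal Python sketch of this clamped falloff, assuming the clamp is applied to the distance as max(d, s) (clamping d² against s² is equivalent). The names `LIGHT_SIZE` and `clamped_inverse_square` are illustrative and not taken from either renderer.

```python
LIGHT_SIZE = 0.01  # 1 cm, the hardcoded light size mentioned above

def clamped_inverse_square(d, s=LIGHT_SIZE):
    """Inverse-square falloff with the distance clamped to at least s."""
    clamped = max(d, s)
    return 1.0 / (clamped * clamped)

# Beyond the light size, the curve is the plain inverse-square law.
print(clamped_inverse_square(2.0))

# Closer than the light size, the value stops growing (about 10000 for s = 0.01).
print(clamped_inverse_square(0.005))
print(clamped_inverse_square(0.0))
```

Exposing `s` as a parameter instead of hardcoding it is the same idea as a user-adjustable minimum size.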

Today Bakery has a new option called "Falloff min size". This is exactly the Light Size used in the HDRP/Frostbite formula. With this parameter, the new Bakery falloff looks like this: